SUBSTRATE CLOUD

Sovereign AI Cloud for Europe

Run, scale, and secure every stage of the AI lifecycle, from training to inference, inside EU jurisdiction. Substrate Cloud delivers the performance of a hyperscaler with the compliance of a sovereign operator.

Purpose-Built for AI

Every layer of Substrate Cloud is optimized for AI workloads and enterprise orchestration. Customers gain instant access to scalable compute, persistent storage, and managed Kubernetes environments, all protected by Substrate governance.

Core Capabilities

Compute

- AI-optimized compute powered by NVIDIA’s H100, H200, and next-generation Blackwell, GB200, and Rubin architectures.

- Bare-metal or virtual nodes.

- Elastic scaling via API or Kubernetes.
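As a rough illustration of the Kubernetes scaling path, the sketch below builds a standard `apps/v1` Deployment manifest that requests GPUs per pod. It assumes the managed clusters expose GPUs through the NVIDIA device plugin under the `nvidia.com/gpu` resource name, which this document does not confirm; the function name, workload name, and container image are hypothetical placeholders.

```python
import json


def gpu_deployment(name: str, image: str, replicas: int, gpus_per_pod: int) -> dict:
    """Build a Kubernetes Deployment manifest that requests GPUs for each pod.

    Assumption: the cluster advertises GPUs as the extended resource
    "nvidia.com/gpu" (the name used by the NVIDIA device plugin).
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # scale out or in by changing this value
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "resources": {
                                # Extended resources such as GPUs are set under limits.
                                "limits": {"nvidia.com/gpu": gpus_per_pod}
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical workload name and image, for illustration only.
    manifest = gpu_deployment(
        name="inference-worker",
        image="registry.example.com/inference:latest",
        replicas=4,
        gpus_per_pod=1,
    )
    print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`. Changing `replicas` and re-applying is one way to scale the workload.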

Storage

- NVMe + S3 hybrid with replication & snapshotting.

- Immutable (WORM) storage for compliance.

- Multi-region replication inside the EU.

Networking

- InfiniBand + GPUDirect for high throughput.

- Private VPCs, VPN & direct interconnects.

- Edge delivery points across EU regions.

Security

- ENS High | ISO 27001 | GDPR | SOC 2 (in progress).

- AES-256, BYOK/HSM, TLS 1.3.

- DDoS + WAAP mitigation.

Regions & Architecture

Substrate Cloud operates from sovereign facilities in Spain and across the EU, with extended EU regions for redundancy and additional global regions available for low-latency workloads. All infrastructure is governed by Substrate under EU law, ensuring that data never leaves European jurisdiction. Customers can choose dedicated sovereign capacity or elastic regional compute for scalability.

Observability & Governance

Transparent monitoring, real-time billing, and compliance dashboards keep you in control. A 99.99% availability SLA is backed by predictive alerts and automated incident response.
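To put the 99.99% figure in concrete terms, the short calculation below converts an availability percentage into a downtime budget. It is a generic arithmetic sketch: the measurement window and any exclusions in the actual SLA are not stated here, so the 30-day and 365-day periods are assumptions.

```python
def allowed_downtime_minutes(availability_pct: float, period_days: float) -> float:
    """Maximum downtime, in minutes, that still meets the availability target."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability_pct / 100)


if __name__ == "__main__":
    # Assumed measurement windows; the real SLA terms may define them differently.
    for label, days in (("30-day month", 30), ("365-day year", 365)):
        minutes = allowed_downtime_minutes(99.99, days)
        print(f"{label}: {minutes:.1f} minutes of downtime budget")
```

Under these assumptions, 99.99% availability corresponds to roughly 4.3 minutes of downtime per 30-day month, or about 52.6 minutes per year.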

Hybrid & Multi-Cloud Integration

Connect Substrate Cloud to existing on-prem or multi-cloud environments through private links and federated identity. Maintain data residency while extending compute capacity globally.

IDEAL FOR

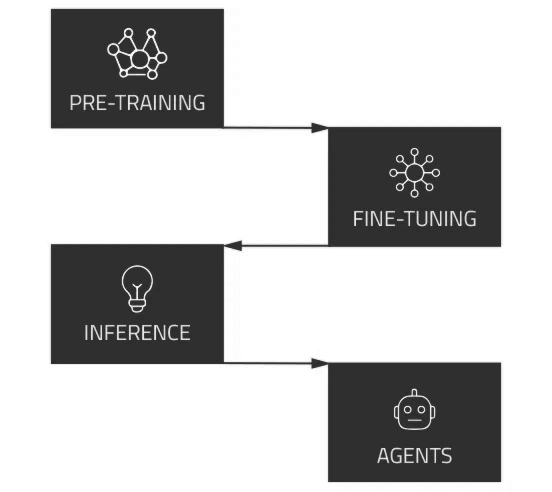

- LLM training and fine-tuning

- Inference and serving at scale

- RAG and agent workloads

- Data-sovereign MLOps pipelines

- Federated research and public-sector AI